Imagine a world without electricity, cars, computers, or the internet: a truly bleak place. Or one where each of these things is owned and controlled by one company. That's what our world would look like today if the human knowledge that brought about these innovations had been locked away, never shared openly. None of the benefits of modern technology, science or healthcare would be there for us to move society forward, together, at the rate we have. We would be set back hundreds of years.

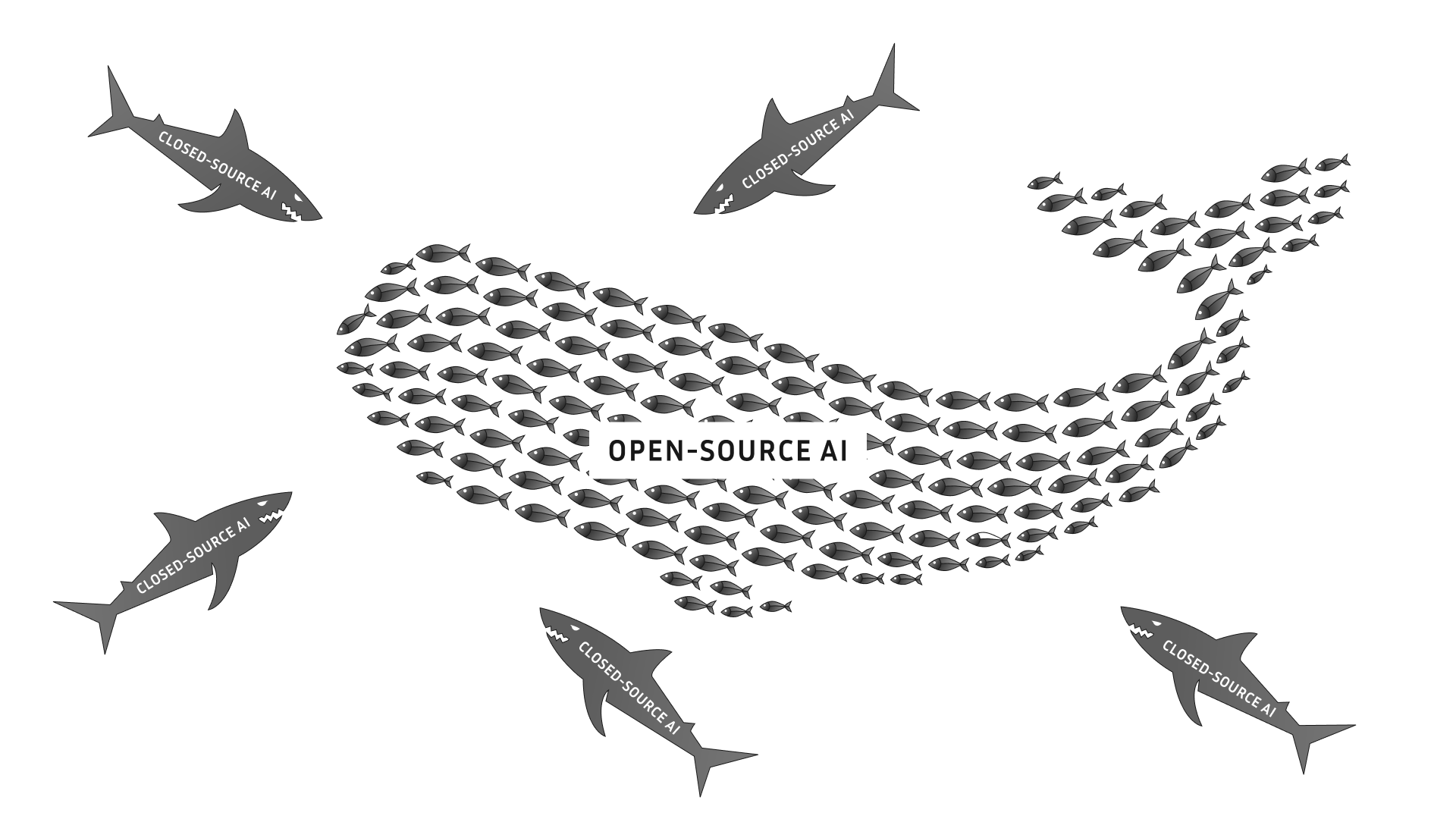

Currently, we're faced with the risk of AI, our most powerful invention, failing us. For the sake of short-sighted commercialization and in complete misalignment with humanity's best interests, the development of this highly complex technology has become increasingly closed. Leaders in the industry have become increasingly secretive, moving further and further away from open knowledge sharing and collaboration. Instead, they are feverishly competing against each other to "win," all while wastefully re-inventing each other's work at enormous human, capital, and environmental cost.

And despite this high cost, they are still failing us. The technology is too complex for any single company to advance effectively on its own, without openly sharing knowledge and collaborating with the rest of the AI community. Yet these leading AI companies arrogantly try to dissuade others from pursuing this technology. They want you to believe that the future of AI progress is closed, that it is our destiny to kneel at their feet. They want you to pay tribute and let them freely monetize. They want you to believe that they are the chosen prophets who will lead us all to our AI salvation.

This pattern is straight out of the Unix playbook. The major tech players developed their proprietary, closed-source versions of Unix, and those seemed to be the only "possible" and "right" solutions. The argument, or assumption, was that these major tech players were the only ones with the talent, quality and capacity to develop versions of Unix. Then, Linux showed the world how open source was a better approach to developing such a critical and complex technology. Linux gave the crucial flexibility that developers needed to customize it to their needs. It gave them the ownership they needed to avoid getting locked into a closed vendor. It gave them the transparency they needed to fully trust it. All that at a lower cost. As more and more developers adopted Linux, the Linux ecosystem became more mature, safe, versatile and performant than any closed Unix alternative. It became the de facto platform — the platform that is now powering practically all cloud computing and most mobile devices, the internet, and the development of AI itself.

I believe that AI will develop not just in a similar way, but in an even more profound one. If there was ever a technology that should and can thrive in open source, it is AI. Open source AI is not only the best solution for the good of humanity overall, but also for the benefit of every single individual developer and enterprise. At the same time, it is the best path for developing the most competitive version of AI, the one that would dominate the same way Linux did.

AI represents our best opportunity for humanity's advancement. Open source is the best path we have for AI to effectively and safely unlock industry applications and promote scientific discovery.

Foundation models are used across many different tasks, from improving simple traditional scenarios like classification to diverse, higher-level automation tasks. No single AI solution developed by a single company can solve and optimize for all of them at the same time — there is no AI silver bullet. AI has also become critical to advancing the sciences across the board, from healthcare to weather forecasting, from partial differential equation solving to biology. If frontier AI continues down the path of black-box APIs, scientific progress will be needlessly impeded.

Making foundation models safe, unbiased, culturally inclusive, privacy-preserving, and secure across diverse modalities and tasks is no easy feat, to say the least. No existing big tech company seems to be able to invest enough to solve all these challenges, especially when they are preoccupied with one-upping their competitors on the public AI leaderboards, as opposed to focusing on what would benefit the world at large.

Open source helps not only to invest collectively in AI safety, but also to discover the issues that need fixing in the first place. Per Linus's Law, "Given enough eyeballs, all bugs are shallow." It stands to reason that "given enough eyeballs, all AI issues are shallow" too. The open source approach enables the collective review and contributions of a large community, allowing such issues to be quickly identified and resolved.

There is also, of course, the significant debate on the hypothetical existential risks posed by superintelligent misaligned AI. Regardless of where you stand on this matter and the probability you assign to this possibility, one thing is certain: such challenges can be better solved in the open with broad collaboration as opposed to in secret by a single organization whose top goal is a sprint to monetization.

To put it plainly, the control of such a powerful technology should not be in the hands of a handful of corporations. This would lead to extreme centralization of power with significant societal, economic, and ethical implications. Negative repercussions have a far greater chance of being mitigated with an open source approach.

As with open source Linux, open source AI has many critical attributes that make it a better choice for developers and organizations:

Organizations need AI that they can trust. AI data is often sensitive — it may contain private personal information and IP. Organizations don't want to trust closed vendors' infrastructure with their sensitive data. They don't want their data stored on the vendor's cloud and processed with non-transparent code. As in the case of Linux, open source can not only be more secure, but it also provides organizations with the flexibility to deploy it on the trusted infrastructure of their choosing.

And regardless of the infrastructure used, organizations often cannot trust the AI itself when it is developed and offered as a black box solution. For full security and transparency, organizations need to understand how it was developed, so that they can anticipate its behavior and better understand how they can improve what matters for their use case specifically. Ultimately, they need more transparency for auditability and compliance.

At the end of the day, closed vendor APIs don't provide enough flexibility for much-needed differentiation. Developers are often initially impressed by the quality of the results they get from calling a closed vendor's API with an LLM prompt honed for their particular scenario; AI has come a long way in the last few years. However, what developers sometimes don't realize, especially when misled by "experts"¹, is that overreliance on such limited, closed APIs could be setting their organization up for eventual failure. For AI-first companies to succeed, they need AI differentiation. After all, what level of differentiation can an organization really achieve with a bespoke prompt, which a competitor could easily replicate?

To differentiate, organizations need to leverage their unique and invaluable troves of data and expertise. And to leverage such data, organizations need AI that is easy to use and gives them full flexibility to adjust it to their needs as they build their AI muscle. Unfortunately, some organizations, for the sake of "convenience," may seem content, lingering in AI's shallow waters: customizing prompts and calling APIs that allow for limited tuning. But such organizations are missing key opportunities for differentiation and will likely find themselves on the wrong side of history. Their competitors likely won't make the same mistake.

Open source solutions that are backed by strong communities can tap into a diverse and deep well of talent. As a result, they often offer richer features, stronger performance, and lower cost, all at the same time, than any of the closed source alternatives. Linux makes this case perfectly.

Lock-in greatly decreases an organization's flexibility to innovate, while typically increasing its costs. Organizations know that, and thus, they don't want to get locked into a specific closed vendor's model, cloud, and hardware; they want control and flexibility. They also want predictability. It can be very disruptive for an organization when a closed vendor forces changes in the model, related infrastructure, or terms of use.

AI isn't just a team sport: it's a global effort. AI is very complex, with a lot of issues to fix and capabilities to improve: it's worthy of having a global team tackle it. The innovation and experimentation needed can be easily pursued in parallel across a distributed and loosely-coupled large global team, an open community spreading all across the world.

To protect their interests, closed vendors openly brag about how many GPUs they have in their attempt to secure their compute "moat." But I truly believe that an open community can do at least as well as they can. In fact, I think that it can do a lot better. Certainly compute is needed for AI research, but, especially with pre-training scaling laws hitting a wall, a relatively "small" siloed group of people doesn't stand a chance against a massive open community when it comes to innovating on new algorithms. The same goes for post-training, which does not require the same level of experimentation resources: which would deliver higher quality, safer, more secure, more reliable, and more versatile models across all the modalities, tasks, and higher level systems and applications, the siloed group or the large community?

Imagine there are two horses in the AI race. There is the closed-source horse that tries to do everything by itself in its silo, bearing the full cost for its efforts. And then there is the horse that brings together all the cumulative innovation and compute contributions of a very large global community of academic researchers, developers, and enterprises. Which horse would be faster? Which would be more cost-efficient? Which one would you bet your business on as a developer or decision-maker? Which one would you bet on as an investor?

I would bet on open-source outpacing closed-source AI every time.

While there are several examples of complex technologies in modern history that were advanced better in open source than in closed source, the future of AI won't just fix itself — it needs everyone's help. The future of operating systems didn't fix itself: Linus Torvalds released Linux, and then the community joined in.

AI needs its "Linux" moment to thrive: a complete open-source platform with all the required development tools, propelled forward by the open community. Open-weight models developed in a silo by Meta², DeepSeek, Alibaba, and others won't cut it. The three components (open data, open code, open weights), as identified by OSI, are not sufficient either. For AI to succeed, it also needs to have "open collaboration," and for that, AI needs a platform. Access to the data, code, and trained model is of limited value if there is a barrier for others in the community to experiment, build upon it, and contribute.

Collaboration must be the lifeblood of this "Linux" for AI. The ability to reproduce and build on top of others' contributions, across the full spectrum of frontier foundation model development, is a must. Collaboration needs to be the priority, or open source AI will fail to fulfill its promise.

Until now, the right AI collaboration platform didn't exist, so we started building one, Open Universal Machine Intelligence (Oumi), alongside academics from 13+ universities. We started building it to support foundation model research and to enable the community to effectively work together. The Oumi platform supports pre-training, tuning, data curation/synthesis, evaluation, and any other common utility, in a fully recordable and reproducible fashion, while being easily customizable to support novel approaches. It supports all common open models (Llama, Qwen, Phi, Mistral) and any platform, with seamless experiment transition from one's laptop to any cloud (AWS, Azure, GCP, Lambda) or on-prem cluster. Regardless of where you get your compute, you can still experiment together with anyone in the community and collaborate.

With the right AI collaboration platform in place, the community can develop foundation models collaboratively. We will be starting with the post-training of existing open models, which represents an excellent opportunity for the open community to advance the state of the art: sign up here if you are interested in participating. Next up will be collaboratively pursuing improvements to pre-training.

For AI to succeed, we can't work in silos. We need to all work together and be on the same team. If we work together, we will succeed, and open source AI will fulfill its promise. Every single organization and every single one of us will then greatly benefit. If not… well, let's just all make sure we build the right future for AI, together. The alternative is simply unacceptable.

What future do we want for AI and what will we do about it?

Let's start building it here… 🚀🚀🚀

- Manos

CEO, Oumi

1 An Anthropic representative strongly suggested to me at AWS re:Invent (Dec '24) that I don't need to train models and that curating a prompt is all I need. I can't overstate how wrong this self-serving argument is. Note that Anthropic doesn't currently provide any tuning APIs.

2 I still applaud and greatly thank Meta and others for their open-weight model efforts. They go way beyond what their peers are doing for the open community.